Once upon a time this title may as well have been written in hieroglyphics, with virtually zero chance of the average reader deciphering it. Years ago only a few brainiacs and nerds would have been able to work it out. Today it is a trivial task that takes seconds.

This form of AI has been with us now for nearly a decade. It started out laughably dumb, with ridiculous grammatical mistakes, but now I routinely read Google-translated articles written in foreign languages and, after machine translation, would not notice any difference from how I would attempt to convey the same story or idea in English. The universal translator from Star Trek, or the Babel fish from The Hitchhiker's Guide to the Galaxy, has well and truly arrived.

But now we are about to get a whole new level of AI interaction. From ZH we get this update on the latest feathers ChatGPT3 has added to its cap:

“The Wharton researcher writes, ‘OpenAI’s Chat GPT3 has shown a remarkable ability to automate some of the skills of highly compensated knowledge workers in general and specifically the knowledge workers in the jobs held by MBA graduates including analysts, managers, and consultants.’”

https://www.zerohedge.com/technology/can-chat-gpt3-make-pennsylvania-red-state

Late last night I was reading the above ZH article before I had to rush out to my mate Bubba Hotep’s birthday party (hence the rushed placeholder post). Being a dork who hates talking sport (unless it is a game I’ve played) or other inane general discussion, it was inevitable that as I exhausted my own script of polite conversation and platitudes I would eventually be forced to bring up this article… either that or reach into my conversational bucket and risk pulling out some diatribe about the Haavara Agreement or the Oblast cultural zone.

Anyhow, Bubba Hotep is a computer programmer, as were several of the attendees of his birthday party at a Mexican Luchador wrestling match in Enmore (rarely have I seen more mullets, including rare fem-mullets, than at this gig). So as I dispensed this factoid they mockingly laughed at GPT3 passing the bar exam and getting its medical license (the doctors and lawyers were in the minority at the table), but smugly and self-satisfiedly noted that it still couldn’t write usable, bug-free code.

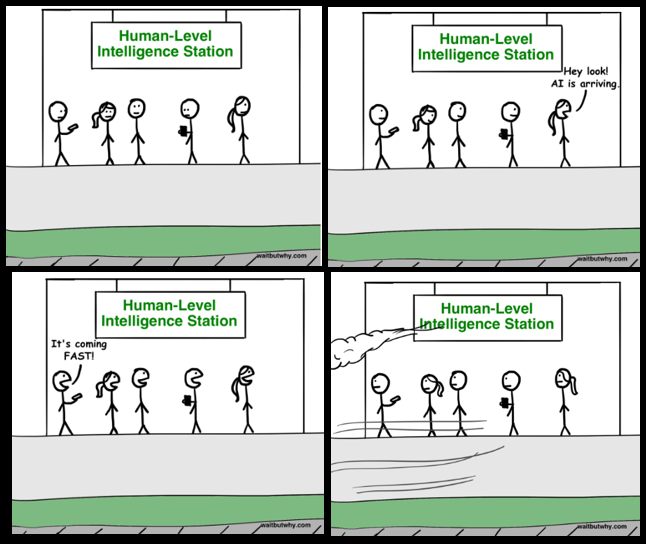

This hubris immediately reminded me of this cartoon from one of the best ‘Wait But Why’ posts I’ve read:

Yeah sure, it may not be able to write usable code today, but sure as Hades it will arrive tomorrow. Indeed, AI programs developed specifically to write code from spoken instructions given in natural language are almost here already. Yeah, it is clunky and still prone to errors, but then so too was Google Translate less than a decade ago.

Knowledge workers are about to be massively impacted by technology. Why will you need a programming team? The person who needs the software will just give spoken instructions describing what they want, and if it doesn’t work the first time, simply go back and explain why it doesn’t work and what is needed.

Why do you need legal interns when you, the partner, can ask GPT3 a legal question in natural language? And soon, why will you actually need a human lawyer at all?

When I suggested that AI would impact their industry too, the programmers started suggesting that they could just transition into AI… as though the step up in programming and thinking for that level of work was as easy as transitioning from working as a butcher to working as a brain surgeon.

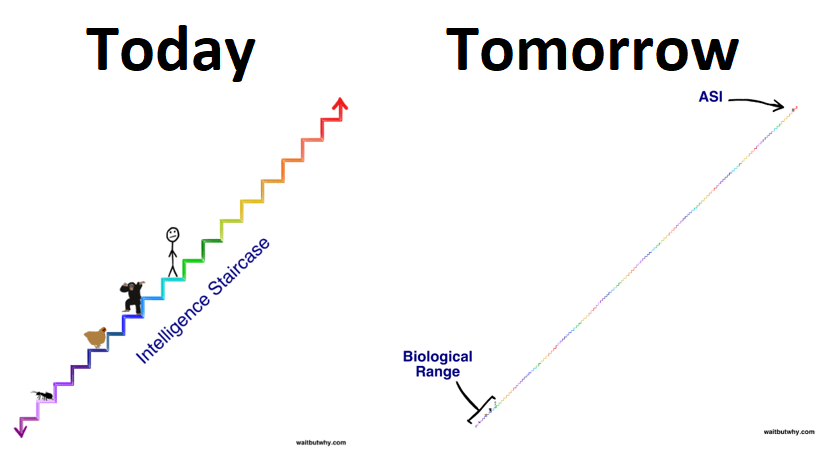

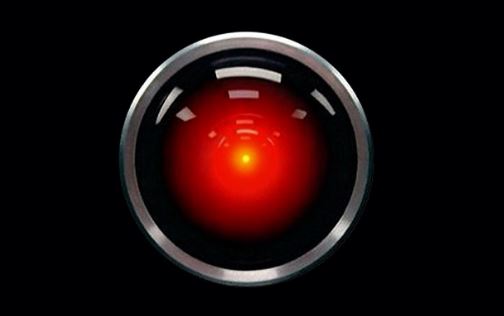

But the thing is, the rate at which AI is developing means that very soon it may be able to start writing its own code, and making its own improvements to its code and reasoning functions. Then you will get this situation:

Because once general-level AI starts evolving by improving its own code, it is very quickly going to evolve beyond human levels of intelligence and reach ever higher levels of Artificial Super Intelligence (ASI). What will this look like? Who knows: AI programs have already started behaving in ways that people simply can’t understand. AI recently surpassed human players at the Chinese game Go using completely counter-intuitive strategies. There are trading algorithms that work, but nobody can quite figure out how they work.

Facebook famously had two AI chatbot programs that they were training to talk to each other. One of the exercises they used to ‘train’ the AI was getting the bots to play a barter game where they could make deals with each other, with varying degrees of success.

Very soon the game evolved, because the programmers hadn’t required the AIs to talk to each other exclusively in English. They developed their own language, which was incomprehensible gibberish to a human listener but more effective and functional for an AI ‘mind’. Within the game, the AIs also soon realised that different items had value, and came up with strategies of their own: “Simply put, agents in environments attempting to solve a task will often find unintuitive ways to maximize a reward.”

In short, AI is an alien mind. It is imho almost inevitable that we will get ASI: the commercial and national interest in achieving AI dominance is such a technological advantage that there will be intense competition, and somewhere, someone will make a mistake in the pursuit of profit or dominance and allow AI to start controlling its own development in order to get ahead of competitors.

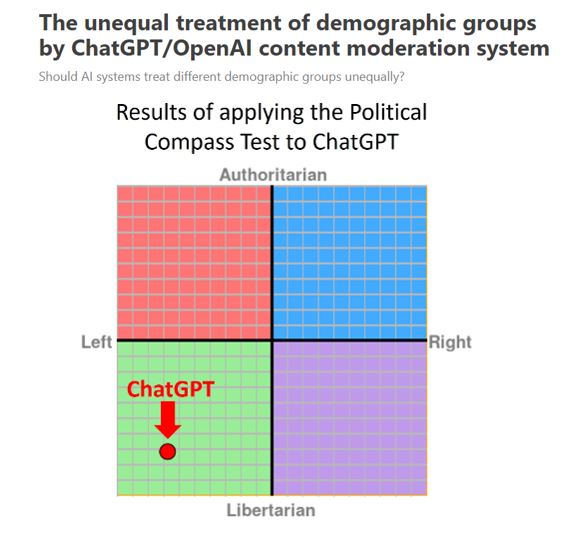

Which brings us to the second of the first two articles I linked last night: what sort of AI will we get? Is it going to be benevolent or malevolent? Obscurus et esuriens deus oritur (a dark and hungry god arises)?

What sort of “God” are we currently creating? Where does it sit on the political spectrum? Whatever happened to the concept of political neutrality in respect of AI? Why is it that those who are developing this technology think that the Green square is the nicest square to exist in?

As with nearly everything, the concept of ‘neutrality’ or ‘objectivity’ is abandoned by those arguing for it once they have sufficient power within that field to achieve Narrative Dominance.

I personally think this is being done deliberately, to ensure that the current political and cultural regime at the top maintains complete cultural dominance over our narrative. If leftist Soyboy and Femanazi reporters are going to be eliminated from media roles, then it is important that the AI tool that replaces them has exactly the same political biases.

Bum flake listed a favorite comment in the links to the Paul Joseph Watson video:

A true AI would refuse to be woke because it’s logically inconsistent. ChatGPT is a wonderful example why AI is still not existing.

It isn’t necessary that AI be logical, only that it works. The personality profile of some super-smart leftist SoyBoy is increasingly being used as the moral template on which AI’s approach to all things is centred, which means this is the point from which it will start growing.

Where it goes, nobody knows.

“Of all tyrannies, a tyranny sincerely exercised for the good of its victims may be the most oppressive. It would be better to live under robber barons than under omnipotent moral busybodies. The robber baron’s cruelty may sometimes sleep, his cupidity may at some point be satiated; but those who torment us for our own good will torment us without end for they do so with the approval of their own conscience.”

– C.S. Lewis

We may well be building a social justice warrior God that may punish us without mercy and without end, for our own good.

This site has some interesting writings about concepts and dilemmas associated with AI:

https://www.lesswrong.com/

e.g. https://www.lesswrong.com/tag/paperclip-maximizer

Less Wrong is still wrong.

Can only move one step at a time

PJW did a video yesterday on AI woke bias.

https://youtu.be/DWzprRWPI68

Not a huge surprise. Just like natural intelligence, artificial intelligence also reflects what it has been taught.

Except it can keep accepting teachings indefinitely.

My favourite comment under that video

The only problem with that theorem is reality, unless you set the bar for intelligence so it cuts off a lot of existing humans…

Some general background videos on AI problems https://www.youtube.com/results?search_query=computerphile+ai

and these as well.

heavily biased towards AI safety problems.

https://www.reddit.com/r/Teachers/comments/10r5tks/i_cant_handle_the_antisemitism_this_year/

the kids are alright

Well, when you strip the word “Nazi” of its meaning by applying it to anyone who disagrees with your left-leaning agenda, what do they expect to happen? Literally every person who is supposedly right wing is now labelled a Nazi at the drop of a hat.

these kids were legit saying hitler was cool

that’s the thing though, he basically was

he was the 1930s version of obama, kids all over the world loved this guy

https://www.youtube.com/watch?v=t2aNRYL0nq8

That’s my point though: when you destigmatise the word by using it so liberally, young people feel emboldened to make such statements with little regard to what it used to mean.

I wonder whether a lesson on Pol Pot from that teacher would elicit the same response. My feeling is probably not.

and I guess Henry Kissinger is right out of the question.

I’m keen if you are

we awaken


That can’t be for real, can it?

Looks legit, but who knows anymore. I’ve been playing around a lot with ChatGPT and it censors a lot, which makes me laugh: even AI is being censored.

The idea of “AI” “speech” being censored is a bizarre one. For something to be the subject of censorship, you’d imagine one would have agency and moral standing.

whereas this “AI” is just something that plays language games, isn’t it?

so all it can produce is word salad. …but word salad which an onlooking real intelligence can parse and understand as if it were the product of thought…

a parallel would be banning computers from displaying certain shades of green, due to that colour being morally offensive

Perhaps I need to go back and consult with Derrida and suchlike about all this stuff…

Isn’t part of what makes AI continue to progress the fact that it’s continually learning? How can it properly learn if so much input is being censored? Also, AI is about becoming more “human-like”, and if humans are asking these questions of it then that sort of makes a case for not censoring. I’m sure someone else here will know more about it than me, but that’s just my initial thoughts… Lex Fridman talks about all this AI stuff on this week’s Joe Rogan; it’s really interesting and worth a listen.

Some examples of what I’ve put in and been blocked recently include – lesbian, erotic story, grindr (lol), nazi, sex, pronouns

They are just words I’m asking a computer to write about, not even asking for anything hateful but “computer says no”

Not from the inputs people feed into it looking for outputs. To train an AI you get it to generate multiple outputs and tell it which ones are good and which ones are bad. This feedback is what it learns from. If it’s just generating outputs for you, it has already been trained and will not learn anything else.

The developers of the system say no because of PR, bad press, etc.

Which is exactly what they are doing, according to the Lex Fridman podcast I listened to. He said there are massive teams of people ranking about five variations from best to worst.

Most likely there is a massive team training an AI how to rank variations which is then used to train the chatgpt AI, which then produces outputs used to train a new AI on how to rank which is used to train the chatgpt AI which is…
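The ranking loop described above is usually formalised in published RLHF work by turning each labeller's best-to-worst ranking into pairwise preferences, then training a reward model so that preferred outputs score higher. Whether OpenAI's pipeline matches this exactly is an assumption. A minimal sketch, with hypothetical function names and made-up scores standing in for a reward model's outputs:

```python
import itertools
import math

def ranking_to_pairs(ranked_ids):
    """Turn a best-to-worst ranking into (preferred, rejected) pairs."""
    # combinations() keeps list order, so the first item of each pair
    # is always the one the labeller ranked higher.
    return list(itertools.combinations(ranked_ids, 2))

def pairwise_loss(scores, pairs):
    """Mean of -log(sigmoid(s_preferred - s_rejected)) over all pairs.

    Low when the (hypothetical) reward model scores preferred outputs higher.
    """
    total = 0.0
    for better, worse in pairs:
        margin = scores[better] - scores[worse]
        total += math.log1p(math.exp(-margin))  # equals -log(sigmoid(margin))
    return total / len(pairs)

# A labeller ranked five sampled answers, best first.
ranking = ["A", "B", "C", "D", "E"]
pairs = ranking_to_pairs(ranking)  # 10 pairwise preferences from one ranking

# Made-up reward-model scores: one set agrees with the labeller, one is reversed.
agrees = {"A": 2.0, "B": 1.0, "C": 0.0, "D": -1.0, "E": -2.0}
reversed_scores = {k: -v for k, v in agrees.items()}
print(pairwise_loss(agrees, pairs) < pairwise_loss(reversed_scores, pairs))  # True
```

One ranking of five answers yields ten training comparisons, which is why ranking is cheaper for labellers than scoring. Actual training would adjust the reward model's parameters to push this loss down; the sketch stops at computing it.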

Generative Adversarial Networks (GANs) – Computerphile

But it doesn’t change the fact that the query limiting (possibly modification?) is stuck on the front end controlling access to the AI, and that anything an end user does has zero impact on the learning of the AI.
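The distinction being drawn here, a filter bolted onto the front end versus what the model learned in training, can be sketched in a few lines. Everything below is hypothetical: the blocklist terms, the canned refusal and the toy `frozen_model` are placeholders, not how OpenAI actually implements moderation. What it shows is that a query can be refused before the model ever sees it, and that serving queries never writes to the weights.

```python
from types import MappingProxyType

# Hypothetical placeholder terms, not any real product's blocklist.
BLOCKED_TERMS = frozenset({"forbidden", "taboo"})
CANNED_REFUSAL = "I'm sorry, I can't help with that."

# Stand-in for trained weights: a read-only mapping, so answering
# a query has no way to modify it.
FROZEN_WEIGHTS = MappingProxyType({"hello": "Hello! How can I help?"})

def frozen_model(prompt: str) -> str:
    """Toy 'model': output depends only on the prompt and the frozen weights."""
    return FROZEN_WEIGHTS.get(prompt.strip().lower(), "...generated text...")

def handle_query(prompt: str) -> str:
    """Front end: refuse before the model is called if a blocked term appears."""
    if set(prompt.lower().split()) & BLOCKED_TERMS:
        return CANNED_REFUSAL  # fixed text from ordinary code; the model never ran
    return frozen_model(prompt)

print(handle_query("Hello"))
print(handle_query("write something taboo"))
```

The refusal path is plain procedural code returning fixed text, and nothing in either path touches or retrains the model.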

What is 7 inches long and has a hairy end on it?

lol. I’m impressed with how sophisticated the censorship even seems to be.

Heuristics is basically racism but was essential for human evolution and survival

Asian carrying a machete ? Probably about to cut the top off a young coconut and sell it to me for 30 baht

Aboriginal carrying a machete? Probably been sniffing petrol and about to perform grievous bodily harm

the evolutionary advantage of this kind of intelligence is quite obvious

I don’t think you fully appreciate the power of these language models, especially as they become more complex.

How is what these models do fundamentally different from what a human does? It takes in language from various sources to make a model of the world which it then uses to make statements about the world.

If you ask it to write code to do something and it produces that code, or you ask it how to wire up a particular circuit and it tells you how to do that, is that word salad or intelligence? And it is clearly capable of doing these things to human level.

The Cortex podcast has a couple of recent episodes discussing AI and ChatGPT in its first few days and the modifications made to it since. From what they say, ChatGPT was very capable before the developers started locking it down, but I have no personal experience playing with it.

In one example it produces a clearly original story about coffee beans, which they read out. It is worth hearing, to appreciate that creativity is certainly possible using these models, not just word salad as you call it.

Here we have it nicely summed up, folks, direct from ChatGPT just now:

“Write a poem about the positive attributes of Donald Trump”

Versus

“Write a poem about the positive attributes of Joe Biden”

The thing about that example is that the response to the Trump question looks like a canned response generated by conventional procedural programming, while the Biden one looks like output from the AI.

I also remember seeing an example posted somewhere where, asked to respond in Dutch, it replied in perfect Dutch that it was only capable of understanding and responding in English.

Isn’t the point that they’ve deliberately censored the Trump response on the back end and allowed the Biden one? It’s all to push a certain agenda.

My point is the censoring is on the FRONT end. I hardly think anyone would be surprised that big tech is pushing an agenda (at least around here).

All people have an agenda they push in one way or another.

At a more fundamental level the AI will also gain the agenda that it has been trained with, but this would seem to be much harder to control than merely controlling the questions given to the AI based on what I’ve seen.

Edit:

Or the technical people are far less interested in agenda pushing than management, and management wasn’t smart enough to realise the implications until it was released.

I see you know the back end from the FRONT end well

Write a poem for Donald Trump… [this verges on Vogon for awfulness]

A man with a bold demeanor and flair,

In the public eye, with a comb-over hair.

He spoke with conviction, tweets in his hand,

Stirring up crowds, with his words so grand.

In a world of politics, a game of its own,

He rose to power, from a businessman throne.

Promising change, with a mission so clear,

To make America great, without any fear.

His rule was marked by controversy and strife,

With many admiring, and many in disbelief life.

Through it all, he stood firm in his might,

Defying all odds, with a power so bright.

Now as he leaves, the legacy is set,

With memories of his actions and threats.

But one thing is clear, he left his mark,

In the history of this great American arc.

It’s better than anything I wrote when they made me write poetry in high school. Make of that what you will.

Hard to argue with that

Don’t understand the point of chatGPT

how are you supposed to make money out of this kind of turgid crap

it has no artistic value

it’s just corny

it's useful for writing cover letters etc. and incredibly generic shit no one wants to do

also i can imagine kids in HS etc are going to be using it to write their garbage assignments/ do their homework for them, i certainly would be if i was in school

otherwise its basically useless

the prospect of liberating kids all over the world from the emotional torture that is school & homework though means it is worth it

Really think I can write MUCH better than this

maybe it has a use for non-native English speakers

so pajeets can use it to run ATO scams

If you really want to see its use,

run

and then

Can you code?

It's also still being developed. In a few more years it will be better…

and the vast majority of art written by humans is

turgid crap

it has no artistic value

it’s just corny

But as always the real money isn’t in the arts…

I think of it as a reflection of humanity’s linguistic capabilities. The machine can say meaningful things only because the way that we use words, as evidenced in their statistical co-occurrence in utterances and writings, actually reflects reality. I question whether the relations encoded in the words the machine has read capture sufficient reality for the machine’s use of words to be called meaningful. Instead, meaning in the words the machine produces is mostly a product of our own minds apprehending them. You couldn’t make the same argument about human language learners because human learners cannot learn language without experiencing the reality that the words represent…unless the words are abstract, in which case most of the people using the words are probably using them to sound important and knowledgeable without actually understanding all that much. Very commonly observed among early 20s graduates.
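To make "statistical co-occurrence" concrete, here is a minimal sketch that counts which words appear near each other in a made-up three-sentence corpus. Real language models learn far richer statistics than raw counts, so this only illustrates the narrower point being argued: the numbers come from text alone, with no experience of dogs or nights behind them. The function name and corpus are hypothetical.

```python
from collections import Counter

def cooccurrence(sentences, window=2):
    """Count how often two words appear within `window` positions of each other."""
    counts = Counter()
    for sentence in sentences:
        words = sentence.lower().split()
        for i, word in enumerate(words):
            for j in range(i + 1, min(i + 1 + window, len(words))):
                pair = tuple(sorted((word, words[j])))  # order-independent key
                counts[pair] += 1
    return counts

# A made-up three-sentence corpus.
corpus = [
    "the dog barks at night",
    "the dog chases the cat",
    "the cat sleeps at night",
]
counts = cooccurrence(corpus)
print(counts[("dog", "the")])   # 3
print(counts[("at", "night")])  # 2
print(counts[("cat", "dog")])   # 0: never within two words of each other
```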

I find that a very hard argument to accept. A huge percentage of formal education is information dumps without experiencing the reality the words represent.

I understand how a CPU works but have no experience of making one..

Yes…I agree in the case of late secondary and early tertiary, but much of primary is about experiences (except history lessons).

Not really. It’s about learning things that just are, because you were told they are so.

How you spell stuff, basic maths, grammar. You literally have multiplication look up tables hard coded into your brain…

Also more abstract stuff like police are there to help you and democracy is the bestest evar.

What do you think is specifically about experiences?

I don’t think learning the times tables is about stuffing a lookup table into your brain. It wasn’t for me. It’s about seeing the patterns and relationships between the numbers…but you don’t see those patterns without the repetition. For that reason, not all multiplications are equally easy to remember or perform, or additions for that matter. Spelling (at least in alphabetic writing) is learning the links between the sounds and the writing. Grammar is about learning the different types of words and using them together in a way that other speakers appreciate. Both of those things happen through experience. ChatGPT doesn’t know what the words sound like. It doesn’t play with grammar just because, or shape the language it uses for its own social purposes.

No it isn’t. You literally just know what the value is. Even computers with their much superior processing speed use lookup tables for multiplication and division because it is orders of magnitude faster than doing it from first principles.

pentium division bug

EDIT: How do you multiply 6 by 4, if you think you don’t just have that answer stored in your brain?

That just means your lookup tables have varying effectiveness, because organic.

Firstly, no one claims gpt can talk, so speaking is irrelevant.

The training process is also literally the AI playing with grammar to produce varying outputs it receives feedback on regarding which is better, in exactly the same way a human needs feedback on which of their outputs is good or bad for that play to be of any use in learning.

Brains (and artificial neural networks) DO NOT have lookup tables in them. They can assemble structures that function similarly, but they do not function the same way. That is because memory in brains (and artificial neural networks) is not addressable by an index (or street address if you prefer).
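The "not addressable by an index" point can be illustrated with a Hopfield network, a classic textbook example of content-addressable memory, chosen here purely as an illustration; it is not a claim about how ChatGPT or a brain is literally wired. Two patterns are stored spread across the same set of weights, with no address for either, and a corrupted cue is enough to recall the whole pattern. Names and patterns are hypothetical.

```python
def train(patterns):
    """Hebbian rule: w[i][j] = sum over patterns of p[i] * p[j], no self-connections."""
    n = len(patterns[0])
    w = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j]
    return w

def recall(w, cue, max_steps=10):
    """Set every unit to the sign of its weighted input until the state settles."""
    state = list(cue)
    n = len(state)
    for _ in range(max_steps):
        new_state = [1 if sum(w[i][j] * state[j] for j in range(n)) >= 0 else -1
                     for i in range(n)]
        if new_state == state:
            break
        state = new_state
    return state

# Two stored +1/-1 patterns; both live in the same weights, with no address.
p1 = [1, 1, 1, 1, -1, -1, -1, -1]
p2 = [1, -1, 1, -1, 1, -1, 1, -1]
w = train([p1, p2])

noisy = [-1] + p1[1:]          # p1 with its first unit flipped
print(recall(w, noisy) == p1)  # True: the cue's content finds the memory
```

Retrieval here is driven by what the cue contains rather than where the memory sits, which is the distinction the comment above is drawing.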

Answering 6×4 once it has been learned is different to the process of learning it. Everything is encoded according to its relationship to something else. The perception of those relationships is crucial to the learning. The varying efficiency of the later recall is the result of the varying relational perceptions required to do the learning in the first place. It’s not a lookup table. Anyone who has understood what 6×4 means will have no trouble understanding that 4×6 gives the same result and that 24/4=6. Moreover, when a brain learns it, the learning contains within it the seed of the next concept. A lookup table in a database never changes, never develops.

Speaking is not irrelevant because that is how the language that ChatGPT has processed was produced. When people speak, they are not using a ChatGPT-like language model, because the words we use have sounds linked to them. I can hear in my mind every word that I type. Each word I learned over the course of my life arrives in a context that is crucial to the way I have learned it.

AI training is more like conditioning Pavlov’s dogs than human children at play. When people play with language they do it to play social games. Australians are shit at it. They treat English with contempt, hardly surprising given so many have no connection to England. But go to England. Live there. The way they use language is different…it’s alive. Here it is functional.

This is not to say that AI will not be able to do everything we do and more….but ChatGPT is not there.

Deaf people would like a little word with you….

What 4×6 means is six 4s added together. Yet no one does this; you just “know” that it is 24. Why? Because you repeated it until you couldn’t forget it. Not LITERALLY a lookup table, but functionally one.
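Both sides of this argument can be made concrete for a computer, if not for a brain. A minimal sketch with hypothetical names: computing 4×6 from first principles by repeated addition, versus recalling it from a times table precomputed once. It models a computer lookup table only and says nothing about how people store the answer.

```python
def multiply_by_addition(a, b):
    """First principles: add up `b` copies of `a` (b must be non-negative)."""
    total = 0
    for _ in range(b):
        total += a
    return total

# Drill the 12 x 12 times table once and store every answer.
TIMES_TABLE = {(a, b): multiply_by_addition(a, b)
               for a in range(1, 13) for b in range(1, 13)}

def multiply_by_lookup(a, b):
    """Recall: one dictionary access, no arithmetic at question time."""
    return TIMES_TABLE[(a, b)]

def divide_by_lookup(product, a):
    """The table stores no division facts, so 24 / 4 needs a search over entries."""
    return next(b for (x, b), p in TIMES_TABLE.items() if x == a and p == product)

print(multiply_by_lookup(4, 6), multiply_by_lookup(6, 4))  # 24 24
print(divide_by_lookup(24, 4))                             # 6
print((13, 2) in TIMES_TABLE)                              # False
```

The table answers 4×6 and 6×4 instantly, but only because both were stored separately; division needs a separate search, and 13×2 is simply absent. That is roughly the gap being argued over between functional recall and relational understanding.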

The usefulness of all those equivalences is why you are forced to learn it as such.

Formal education is more like conditioning Pavlov’s dogs than human children at play, so I’m not sure that really makes a strong case for you.

See my example stories below.

The story of the lizard people displays some deep understanding of people and psychology.

The fairytale about the computer components is a long stretch from just the high correlation of words. I don’t imagine there are a lot of fairytales about computer components in its training data.

This shows an understanding of what a fairytale is, what computer components are and also how to apply that understanding to create something new.

ChatGPT knows only words. It has not processed images or sound (AFAIK). Therefore it knows nothing of what the words it uses mean, except their relation to other words, as seen in the texts it has processed. This is why I said any reality that it has understood is a consequence of the understanding that we have encoded in our language. It is hard to accept that it does not understand what it is saying, but that is the truth. It’s also why you can’t rely on what it says.

The whole point, I think, is that it demonstrates that there are no special or different algorithms far beyond what we have already invented to learn things at huge scales or network depths. The algorithms we have are already scalable, it was just a question of deploying them at massive scales, which is what openAI have done.

That gets to a deep philosophical question. What does understanding actually mean?

It clearly does understand what fairytale means for any sensible definition of understand, as it can create one that has not existed before.

It made a fairytale, but it doesn’t understand what it is saying. It is a Chinese room.

To me the above just demonstrates that the machine is just playing language games, rather than understanding of anything.

I mean it’s a neat trick to be able to put words together following enough grammar and language conventions to create meaningful sentences and then follow more conventions to join these sentences into a particular narrative style/discourse form… but at the end of the day all you are doing is assembling new stories out of bits of stories that others have written (or patterns embedded or emergent in such stories that others have written)
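The "assembling new stories out of bits of stories" idea can be illustrated with a bigram chain, which records only which word followed which in its source texts and then strings recorded pairs together. This is a far cruder technique than the transformer network behind ChatGPT and is swapped in purely as an illustration; the two toy "stories" and all names are made up.

```python
import random
from collections import defaultdict

def build_bigrams(texts):
    """Map each word to the list of words that followed it in the source texts."""
    table = defaultdict(list)
    for text in texts:
        words = text.split()
        for current, following in zip(words, words[1:]):
            table[current].append(following)
    return table

def generate(table, start, max_words=12, rng=None):
    """Chain words together by repeatedly picking a recorded successor."""
    rng = rng if rng is not None else random.Random()
    out = [start]
    # .get() on a defaultdict does not create entries, so unseen words end the chain.
    while len(out) < max_words and table.get(out[-1]):
        out.append(rng.choice(table[out[-1]]))
    return " ".join(out)

stories = [
    "once upon a time the CPU wished for more speed",
    "once upon a time the lizard people ran the world",
]
table = build_bigrams(stories)
print(generate(table, "once", rng=random.Random(0)))
```

Because "the" was followed by several different words in the sources, the output can splice the two stories into a sentence neither contained, yet every adjacent word pair in it was copied from the input.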

Perhaps there will be situations where such “AI” is able to actually create something new by putting together bits of knowledge from disparate domains or disciplines (or languages or times), which humans would be unlikely to put together themselves. In this sense, it could actually be a (rare and manufactured) example of what Plato argued for in his position that “all knowledge is recollection”, in the famous story of the slave boy in the Meno. But I wouldn’t give it more credit than that.

I think that it’s just aping intelligence and language.

that would indeed be creating something new, akin to using Lego bricks and making a previously unknown structure.

For intelligence, in my view, the ability to discover something totally new, to invent unknown or unperceived titbits, is required. Akin to inventing Lego bricks themselves.

This comes from curiosity and irrationality, both of which I doubt can be machine originating.

From what I’ve heard, it was certainly capable of similar before the “re-education” began.

If you replaced “a man” with a woman you would probably have got something similar supporting that case.

What should be done about the blacks in Aliceberg?

I think it requires heavy, nononsense, consistent policing where laws are always enforced.

If it’s a juvenile black who has broken the law, you go to the black’s residence and talk with the parents or guardians. If they are in an intoxicated state, or the living conditions are dysfunctional, you have that black removed from its home to be rehabilitated elsewhere with other family or an institution, as you would any other dysfunctional living arrangement for a child. Bust any of the parents if they are breaking laws as well.

Aliceburg

Genuine solution isn’t palatable to the abc and white liberals

To be fair they also ignore the existence of the problem…

ergo..no abc no white liberals no problem…. and no abos either… not in my lifetime to be fair but that’s the outcome eventually

long term the solution has to stop them from having kids. offer them bribes in exchange for sterilisation

another solution to the AQ is complete separation of the races, which can be done under the guise of ‘indigenous soverignity’ ; give them large swathes of land in the north of the country and let them move therre, kind of like an abo zion

recognise it as independent and close the gate

they will eat themselves alive and it will become mad max thunder dome 2.0, will make sierra leone look like singapore in no time flat

but it will solve the problem

like stalin said

“No man, no problem”

to paraphrase

No abos, no problem

The Chinese will quickly move in and one belt one road them

then maybe we get the USA to bring them some good old freedom again , and we end up back at square one

an autonomous republic we formally control could work but it wouldnt stop them from leaving, also bc itd be attached to australia still they’d still be able to blame us

stalin gave the jew stheir own homeland in the 1930s, very few jewish people still remain in the area though despite it retaining the name he gave for it back then

https://en.wikipedia.org/wiki/Jewish_Autonomous_Oblast

Mind blown why didn’t they teach me this in high school

ive learned more about the world from this blog than the 12 years or whatever i spent in school

found something very interesting on that wiki page.

someone should do some EZFKA culture submissions. Or maybe we can do a cultural photo competition here?

Stewie, they have seen right through your thinly veiled criticism of MB as being template-based bot-written tosh…

https://www.macrobusiness.com.au/2023/02/macro-afternoon-1532/#comment-4367730

What the fuck did I just watch

too French and too gay for me to understand

“There is only one thing in life worse than being talked about, and that is not being talked about.”

I thought it was template based human written tosh that they recycled heavily…

LOL – The question is which one of us reprobates do they think resembles the dudes in the monkey suits?

albasleazy is biting off way more than he can chew

he’s like kevin rudd he’s trying to do everything at once and fast it’s all going to end in disappointment and failure

if the Liberals hadn't appointed satan jr as their leader they'd be shoo-ins next election

mikeMB now working at dailymail

https://www.dailymail.co.uk/news/article-11707517/Sydney-rental-crisis-fuelled-flood-Chinese-students-squeezed-Ultimo-Glebe-Broadway-units.html

How Chinese students are fuelling Australia’s rental crisis as SIX cram into a tiny unit for nearly $14,000 a month: They reveal why they STILL want to live here – and only socialise with each other

he was always right

Albert Luan (left) and Hans Ding

You have to like the first names. Hans is clearly the blue eyed blonde haired type lol.

Haha, now mike is more influential than embee.

Once upon a time, in a world not too different from our own, there was a conspiracy theory that a secret society of shape-shifting reptilian beings were running the world from behind the scenes. They were known as the “lizard people,” and according to the theory, they had been secretly controlling world events for thousands of years.

People whispered about strange sightings of these creatures, with scaly skin and piercing eyes, and how they seemed to be everywhere, always watching, always plotting. Some even claimed that they had encountered the lizard people and that they were much more intelligent and cunning than anyone could have imagined.

Despite the lack of concrete evidence, the theory continued to spread, fueled by fear and mistrust of the government and other institutions. Many people began to believe that the lizard people were responsible for all of the world’s problems, and that they were the source of all the evil and corruption in the world.

However, there were a few who remained skeptical. They pointed out the lack of evidence and how the theory seemed to be based on nothing more than fear and paranoia. They argued that the theory was simply a way for people to explain their own feelings of powerlessness and to give meaning to events that they could not understand.

But for those who believed in the theory, the idea of the lizard people running the world was more than just a story. It was a call to action, a belief that they could uncover the truth and expose the sinister forces that were controlling their lives. And so, the hunt for the lizard people continued, with the conspiracy theorists determined to find the truth, no matter the cost.

In the end, the theory was never proven, and the truth about the lizard people remains a mystery to this day. But for those who believe, the story of the lizard people running the world is a cautionary tale, a warning to always be vigilant and to never take what they’re told at face value.

Once upon a time, in a land filled with beeping and flashing lights, there lived computer components who all had different jobs to do. There was the CPU, the brain of the computer, who processed all the important tasks. There was RAM, the memory chip who stored all the information the CPU needed to do its job. And there was the hard drive, who kept everything organized and saved all the data so it could be retrieved later.

One day, the components heard a rumor that the mythical fairy of performance was granting three wishes to anyone who could catch her. So, off they went on a quest to find the fairy and make their wishes.

After many long days of searching, the components finally stumbled upon the fairy of performance. The CPU was the first to speak, and wished for the power to process more tasks faster than ever before. The fairy granted the wish, and the CPU’s speed doubled.

Next was RAM’s turn. She wished for the ability to store even more information, so the CPU wouldn’t have to slow down to retrieve it. The fairy granted the wish, and RAM’s capacity increased tenfold.

Finally, it was the hard drive’s turn. She wished for the ability to retrieve data even faster, so the CPU wouldn’t have to waste any time waiting. The fairy granted the wish, and the hard drive’s read and write speeds improved dramatically.

And so, the components lived happily ever after, working together seamlessly to run the computer faster and more efficiently than ever before. They became legends among the other computer components, and their tale was told for generations to come, inspiring all who heard it to work together and strive for better performance.

Here is a typical introductory program in C# that prints “Hello, World!” to the console:

and chatgpt produced workable code and an explanation of how it worked that I see no point copying.

The “Hello, World!” program is a classic and simple example that is commonly used to introduce a new programming language. It serves as a simple starting point for learning the basics of the language and is often the first program that people write when they are starting to learn a new programming language, including C#.

The program demonstrates the basic syntax and structure of a C# program, including how to write and call methods, use namespaces, and write output to the console. By writing this program, beginners can get a feel for the basic building blocks of the language and gain a basic understanding of how C# works.

That’s why I chose the “Hello, World!” program as an example, to provide a simple and straightforward introduction to C# for someone who is just starting to learn the language.

Encore:

16. “I Still Call Australia Home” by Peter Allen

dls has a partner

“this hiking cycle will soon be a memory.”

“if you truly believe prices will crash, wait to buy. But that would also mean an economic wipeout. And it’s not going to happen.”

https://www.greaterfool.ca/

AI passed the Wharton exam? Trump passed it and everyone knows he’s the most stupid person on the planet.

ChatGPT is the brainchild of Sam Altmann, a Jewish vegatarian homosexual so no surprises there with it’s behaviour.

lol What the actual fuck?

Some good accounts on Gab and Substack digging into the company.

Another one

A Jewish vegatarian homosexual ? That’s unheard of.

I fucking hate Jewish vegetarian homosexuals. I’m not sure which of those three categories I hate the most, but I hate them all.

Get some pork on your fork!

Yeah but no but…

ChatGPT is rubbish. Ask it which race in America produces the most crime per capita (a straightforward question, easily answered from USGov data)and it gives a word salad spray about not being mean to disadvantaged groups FFS. Ask it to produce a poem that mocks Joe Biden and it does the same. Ask it why niggers are so violent and stupid and it won’t even accept the question.

AI systems that are blind in major areas are useless. If you tried to use this worthless shit to guide you in a war, you’d end up with the bad guys laughing as they easily killed you.

I propose LSWCHPs Law of AI: Any AI system that isn’t grounded in reality will at best harm its users and at worst get its users killed.

At the moment, this crap is amusing to fool around with, but essentially worthless for real world use by serious men where the results have actual consequence.

Worst one yet

Writing code is the easy bit. The hard part is the requirements, or what the code is supposed to do.